What are sliders?

Sliders are a novel way to fine-tune text-to-image diffusion models, such as Stable Diffusion, without requiring a dataset. Instead of training on a large set of images, sliders use self-play techniques: the model learns from its own outputs rather than from collected examples. This makes them easier to create than traditional fine-tunes, and it opens up results that are hard to reach when training is tied to an existing image set.

Sliders were introduced in the Concept Sliders paper, https://arxiv.org/abs/2311.12092. The authors' repository is at https://github.com/rohitgandikota/sliders and our custom fork is at https://github.com/ntc-ai/sliders-conceptmod.

What is NTC Slider Factory?

NTC Slider Factory is a platform that makes creating and discovering sliders simple and accessible. With our user-friendly interface, you can train your own custom sliders without needing expensive graphics cards or deep technical knowledge. We offer cost-effective access to powerful training infrastructure, as well as a growing library of pre-trained sliders you can use immediately.

Key benefits of NTC Slider Factory:

- No specialized hardware or technical expertise needed

- Intuitive UI for creating and managing sliders

- Affordable training costs

- Access to a diverse library of pre-trained sliders

- Ongoing education and community resources

Why use sliders?

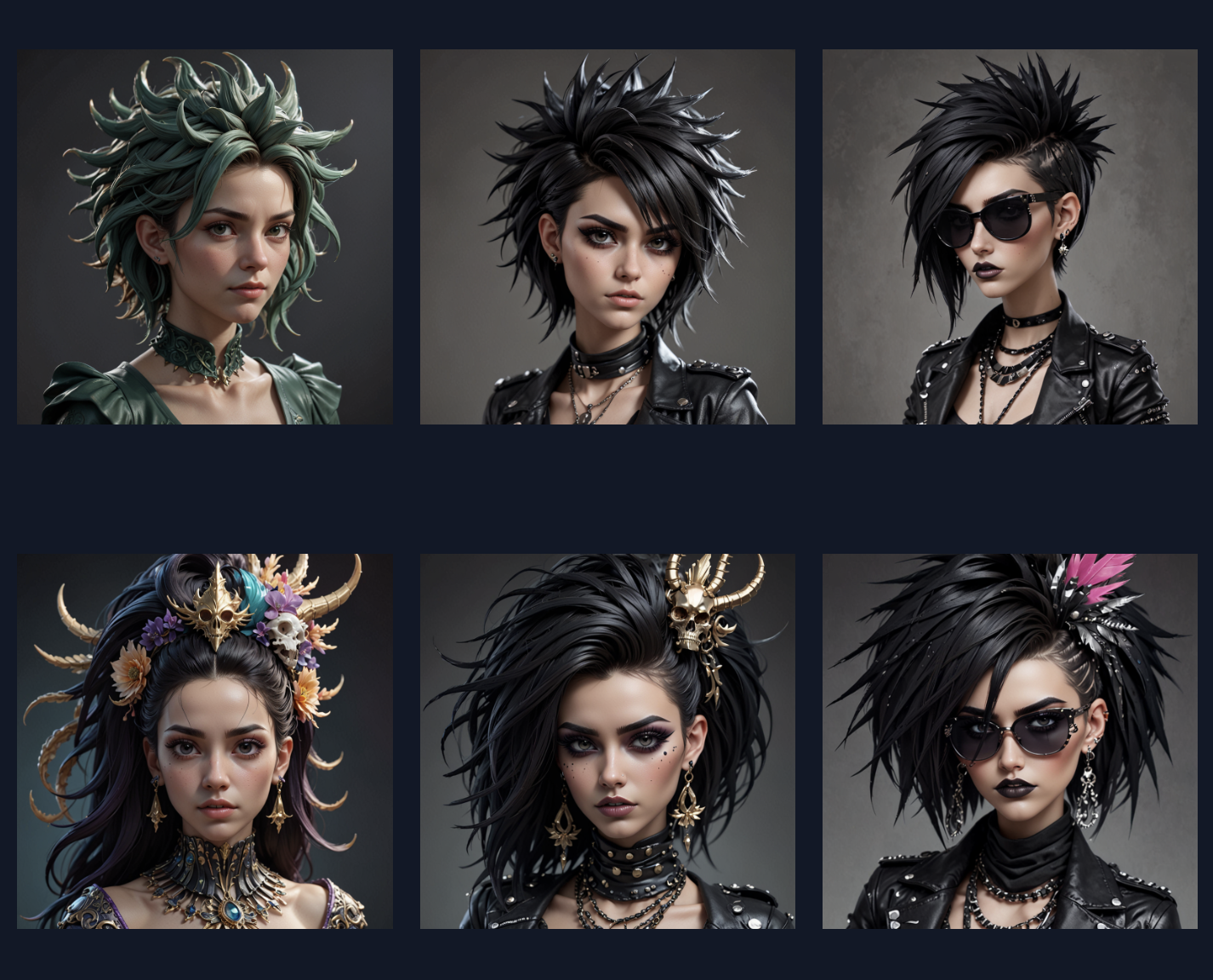

Sliders unlock new creative possibilities with your diffusion models. By encoding unique styles, aesthetics, or subject matter, they allow you to push the boundaries of what's possible with AI image generation. Sliders can be used to:

- Develop distinctive artistic styles

- Refine model output for specific domains

- Experiment with novel combinations of concepts

- Achieve niche or specialized effects

- Create persistent characters

- Prevent undesired outputs

- And more!

How do I use sliders?

Using sliders is similar to working with other fine-tuning methods like LoRA or DoRA. Once you've created or obtained a slider file, you can apply it to your diffusion model in a few lines of code:

```python
import torch
from diffusers import StableDiffusionXLPipeline, EulerDiscreteScheduler

# Load the SDXL base model from a local single-file checkpoint
filename = "sdxl.safetensors"
pipe = StableDiffusionXLPipeline.from_single_file(
    filename,
    torch_dtype=torch.float16,
    variant="fp16",
    use_safetensors=True,
    local_files_only=True,
).to("cuda")

# Blend angry and happy: load each slider as a named adapter
pipe.load_lora_weights("sdxl_happy.safetensors", weight_name="sdxl_happy.safetensors", adapter_name="happy")
pipe.load_lora_weights("sdxl_angry.safetensors", weight_name="sdxl_angry.safetensors", adapter_name="angry")
# A negative weight pushes away from a concept, a positive weight toward it
pipe.set_adapters(["angry", "happy"], adapter_weights=[-0.5, 0.5])

# Optionally set the scheduler
pipe.scheduler = EulerDiscreteScheduler.from_config(pipe.scheduler.config)

# Render our image: a happy, not-angry cat
prompt = "a vibrant portrait of a cat"
image = pipe(prompt, denoising_end=1, width=1024, height=1024, guidance_scale=2, num_inference_steps=10).images[0]
```
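To build intuition for what the signed adapter weights do, here is a minimal sketch of the standard LoRA merge arithmetic, in which each adapter contributes a scaled low-rank update to a base weight matrix. It is an illustration under assumptions, not the diffusers internals or the slider authors' code: `matmul`, `blend_adapters`, and the tiny 2x2 matrices are hypothetical, and the usual alpha/rank scaling factor is left out for brevity.

```python
# Illustrative only: tiny pure-Python matrices stand in for real model weights.

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def blend_adapters(base, adapters, scales):
    """Return base + sum(scale_i * up_i @ down_i) for low-rank adapter pairs.

    base:     d_out x d_in weight matrix
    adapters: list of (up, down) pairs; up is d_out x r, down is r x d_in
    scales:   one signed weight per adapter; a negative value moves the
              weights away from that adapter's learned direction
    """
    merged = [row[:] for row in base]  # copy so the base stays untouched
    for (up, down), scale in zip(adapters, scales):
        delta = matmul(up, down)
        for i, row in enumerate(delta):
            for j, value in enumerate(row):
                merged[i][j] += scale * value
    return merged


# Hypothetical rank-1 adapters, chosen so each one touches a single entry.
base = [[1.0, 0.0], [0.0, 1.0]]
happy = ([[1.0], [0.0]], [[0.0, 2.0]])  # update lands on entry (0, 1)
angry = ([[0.0], [1.0]], [[4.0, 0.0]])  # update lands on entry (1, 0)

# Same adapter order and weights as the set_adapters call above.
blended = blend_adapters(base, [angry, happy], [-0.5, 0.5])
print(blended)  # [[1.0, 1.0], [-2.0, 1.0]]
```

The "happy" entry moves in the positive direction while the "angry" entry moves in the negative one, which is why a weight of -0.5 reads as "less angry" rather than "half angry". A weight of 0 leaves the base model unchanged.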

For a more detailed walkthrough, check out our Slider Usage Guide.